Navigable Space Construction and Exploration

Navigation with onboard sensors is an essential function for robots. We develop navigable-space construction methods from perception inputs (e.g., cameras) and also local navigation motion planing approaches in complex, unstructured environments.

Navigable Space Segmentation for Autonomous Visual Navigation:

A crucial capability for mobile robots to navigate in unknown environments is to construct obstacle-free space where the robot could move without collision. Roboticists have been developing methods for detecting such free space with the ray tracing of LiDAR beams to build occupancy maps in 2D or 3D space. Mapping methods with LiDAR require processing of large point cloud data, especially when a high-resolution LiDAR is used. As a much less expensive alternative, cameras have also been widely used for free space detection by leveraging deep neural networks (DNNs) to perform multi-class or binary-class segmentation of images.

Left: We use the Intel T265 for localization and the Intel RealSense D435i for computing the surface normal images which are the inputs to our perception model.. Right: Different visual information may provide information on different aspects of the surrounding environment. .

However, most existing DNN-based methods are built on a supervised-learning paradigm and rely on annotated datasets. The datasets usually contain a large amount of pixel-level annotated segmented images, which are prohibitively expensive and time-consuming to obtain for robotic applications in outdoor environments. To overcome limitations of fully-supervised learning, we have been developing a new deep model based on variational auto-encoders. We target a representation learning-based framework to enable robots to learn navigable space segmentation in an unsupervised manner, and we aim at learning a polyline representation that compactly outlines the desired navigable space boundary. This is different from prevalent segmentation techniques which heavily rely on supervised learning strategies and typically demand immense pixel-level annotated images. We also developed a visual receding horizon planning method that uses the learned navigable space and a Scaled Euclidean Distance Field (SEDF) to achieve autonomous navigation without an explicit map.

We deploy our robot in a cluttered environment and the robot can efficiently avoid the obstacles and arrive at a predefined goal without constructing an explicit map.

Navigable Space Construction from Sparse and Noisy Visual SLAM Map Points:

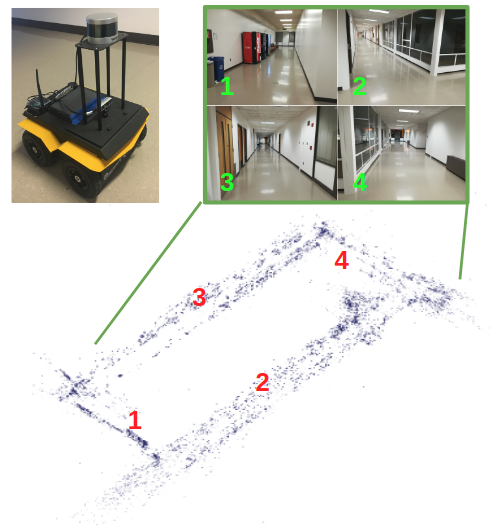

We are also interested in creating navigable space from cluttered point clouds generated by low-end sensors with high sparsity and noise.

Navigable space might be easily identified if the sensors are accurate and with high resolution. However, in many cases only low-quality sensors (e.g., low-end LiDAR or sonar systems) are available due to the constraints of sensor costs or dimensions, and with low-end sensors the environment can only be represented as a set of points (also known as point clouds or map points) that can be extremely sparse and noisy. For example, if the range sensors are noisy and/or the environmental features are barren and poor, the produced map points can be extremely irregular, resulting in significant difficulty for describing 3D objects and planning motion therein. Specifically, there are a couple of challenges: (1) Large noise on point position. Ideally, the map points of a surface (e.g., a wall) should form a plane, but in reality the obtained map points can be scattered randomly in 3D around the surface area, leading to great difficulty for reconstructing a surface. (2) Misleading information. Different from conventional data processing methods where a small number of outliers are viewed as noisy points, in our context the outliers could take a big fraction and cannot be simply discarded. (3) High variation on point density. Due to the sensors’ mechanical limitations such as vertical scanning angle of LiDARs or field of view of cameras, the points may fail to distribute evenly across the map. There might be some places that have dense points while others have too few.

We developed a method to extract navigable space in the "convex" form from a point cloud. Our basic idea is to conservatively extract navigable space by regulating given sparse and noisy map points, the result of which can then be used for subsequent navigation planning and motion control tasks. Our method incrementally seeds and creates local convex regions free of obstacle points along robot's trajectory. Then a dense version of the point cloud is reconstructed through a map point regulation process where the original noisy map points are first projected onto a series of local convex hull surfaces, after which those points falling inside the convex hulls are culled.

(a) The original map point cloud generated by a sparse visual SLAM method. (b) After filtering out outlier (distant) points, the free space is computed. The free space consists of a series of overlapping convex hulls which capture the shape of the free space while excluding any points inside. (c) For better visualization and environmental abstraction, we regulate the original noisy map points by projecting them onto the facets of the convex hulls. (d) The projected point cloud is further refined to be denser and smoother.

Left: details of regulated map points. Right: smoothed map for KITTI dataset.

YouTube link: